Process Satellite Images in Parallel

Transform 3,111 satellite images in 5 minutes for $0.70

Introduction#

This example demonstrates how to process thousands of satellite images in parallel using cloud computing. By leveraging Coiled Batch, you can easily run non-Python tasks at scale without complex cloud infrastructure setup.

The example processes 3,111 satellite images in just 5 minutes, costing around $0.70 total. You can run it right now with minimal setup. All you need is:

pip install coiled

pip install coiled

Full code#

Save this script as reproject.sh and you're ready to go. If you're new to Coiled, this will run for free on our account.

#!/usr/bin/env bash

#COILED n-tasks 3111

#COILED max-workers 100

#COILED region us-west-2

#COILED memory 8 GiB

#COILED container ghcr.io/osgeo/gdal

#COILED forward-aws-credentials True

# Install aws CLI

if [ ! "$(which aws)" ]; then

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip -qq awscliv2.zip

./aws/install

fi

# Download file to be processed

filename=$(aws s3 ls --no-sign-request --recursive s3://sentinel-cogs/sentinel-s2-l2a-cogs/54/E/XR/ | \

grep ".tif" | \

awk '{print $4}' | \

awk "NR==$(($COILED_BATCH_TASK_ID + 1))")

aws s3 cp --no-sign-request s3://sentinel-cogs/$filename in.tif

# Reproject GeoTIFF

gdalwarp -t_srs EPSG:4326 in.tif out.tif

# Move result to processed bucket

aws s3 mv out.tif s3://oss-scratch-space/sentinel-reprojected/$filename

#!/usr/bin/env bash

#COILED n-tasks 3111

#COILED max-workers 100

#COILED region us-west-2

#COILED memory 8 GiB

#COILED container ghcr.io/osgeo/gdal

#COILED forward-aws-credentials True

# Install aws CLI

if [ ! "$(which aws)" ]; then

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip -qq awscliv2.zip

./aws/install

fi

# Download file to be processed

filename=$(aws s3 ls --no-sign-request --recursive s3://sentinel-cogs/sentinel-s2-l2a-cogs/54/E/XR/ | \

grep ".tif" | \

awk '{print $4}' | \

awk "NR==$(($COILED_BATCH_TASK_ID + 1))")

aws s3 cp --no-sign-request s3://sentinel-cogs/$filename in.tif

# Reproject GeoTIFF

gdalwarp -t_srs EPSG:4326 in.tif out.tif

# Move result to processed bucket

aws s3 mv out.tif s3://oss-scratch-space/sentinel-reprojected/$filename

After saving the script, just run one command to execute it on the cloud:

coiled batch run reproject.sh

coiled batch run reproject.sh

Now let's explore how this works and why it's so efficient.

The Problem#

Geospatial data processing often involves transforming large volumes of satellite imagery. In this example, we need to reproject 3,111 Sentinel-2 satellite images hosted on AWS S3. These are high-resolution images of Earth's surface that need to be converted from their native coordinate system to a standard projection (EPSG:4326).

This task presents several challenges:

- Volume: Processing 3,111 files serially would take days

- Location: The data is hosted in AWS, so downloading it locally would be slow and expensive

- Software Dependencies: We need GDAL, a specialized C++ library for geospatial processing

- Resource Intensity: Each file requires significant memory and CPU for processing

Running this job serially on a laptop would take over 2 days, and setting up cloud infrastructure manually through AWS Batch, GCP Cloud Run, or Azure Batch is complex and time-consuming.

Setting Up the Cloud Hardware#

We'll use Coiled Batch to run our processing on cloud resources that are co-located with the data. The entire hardware configuration is specified using special #COILED comments in our script:

#COILED n-tasks 3111 # Process 3,111 files

#COILED max-workers 100 # Use 100 VMs in parallel

#COILED region us-west-2 # Run near the data

#COILED memory 8 GiB # Small VMs are sufficient

#COILED container ghcr.io/osgeo/gdal # Use GDAL container

#COILED forward-aws-credentials True # Access AWS securely

#COILED n-tasks 3111 # Process 3,111 files

#COILED max-workers 100 # Use 100 VMs in parallel

#COILED region us-west-2 # Run near the data

#COILED memory 8 GiB # Small VMs are sufficient

#COILED container ghcr.io/osgeo/gdal # Use GDAL container

#COILED forward-aws-credentials True # Access AWS securely

These directives tell Coiled to:

- Create 100 VMs in the US-West-2 region (same region as the data)

- Provision each VM with 8 GB of memory

- Use a ready-made Docker container with GDAL pre-installed

- Securely forward AWS credentials to access private buckets

- Run a total of 3,111 tasks distributed across these VMs

This configuration provides an optimal balance between performance and cost - more VMs would increase parallelism but might not be cost-effective for this particular workload.

Parallelization Strategy#

The key to efficient processing is the COILED_BATCH_TASK_ID environment variable. Coiled automatically sets this variable to a unique value (0, 1, 2, ..., 3110) for each task. We use this ID to determine which file each task should process:

filename=$(aws s3 ls --no-sign-request --recursive s3://sentinel-cogs/sentinel-s2-l2a-cogs/54/E/XR/ | \

grep ".tif" | \

awk '{print $4}' | \

awk "NR==$(($COILED_BATCH_TASK_ID + 1))")

filename=$(aws s3 ls --no-sign-request --recursive s3://sentinel-cogs/sentinel-s2-l2a-cogs/54/E/XR/ | \

grep ".tif" | \

awk '{print $4}' | \

awk "NR==$(($COILED_BATCH_TASK_ID + 1))")

This line tells each task to:

- List all the

.tiffiles in the bucket - Select the file at the position matching the task ID

- Process only that specific file

Each task then downloads its assigned file, processes it using GDAL's gdalwarp tool, and uploads the result to an output bucket.

Results#

The batch job completed processing all 3,111 images in approximately 5 minutes at a total cost of about $0.70. This represents a remarkable 600x speedup compared to the serial approach.

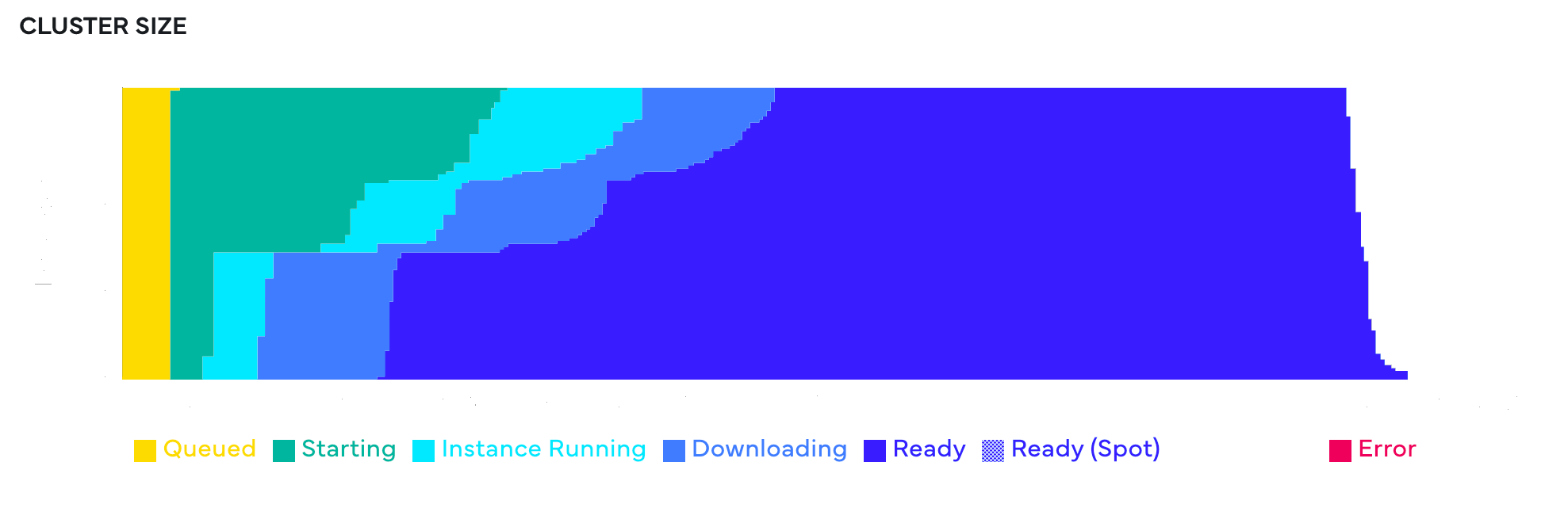

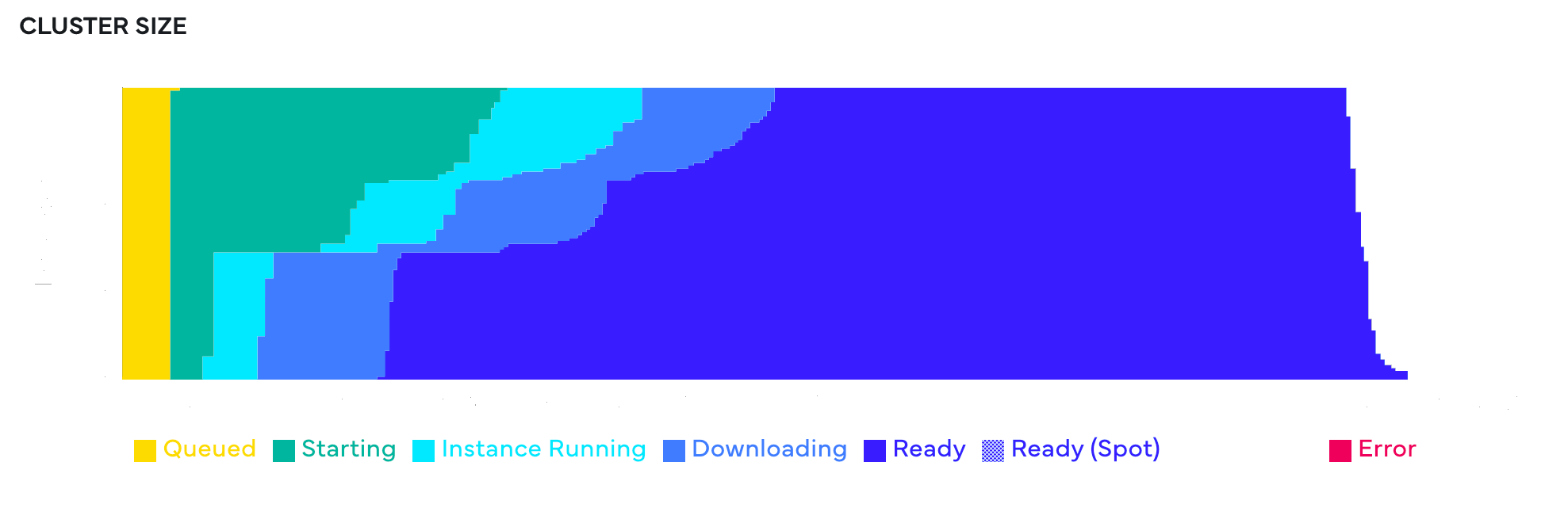

The graph below shows how Coiled rapidly scaled up 100 VMs to handle the processing and then scaled them back down once the work was complete:

This approach provided several key benefits:

- Efficiency: 600x faster than processing serially

- Cost-effectiveness: Only $0.70 for the entire job

- Simplicity: One script and one command to run

- Flexibility: Works with any command-line tool, not just Python

Next Steps#

Here are some ways you could extend this example for your own workflows:

- Do different analyses on the data with different GDAL commands

- Run on different datasets by changing the S3 bucket

- Try different container images for other specialized tools

Get started

Know Python? Come use the cloud. Your first 500 CPU hours per month are on us.

$ pip install coiled

$ coiled quickstart

Grant cloud access? (Y/n): Y

... Configuring ...

You're ready to go. 🎉$ pip install coiled

$ coiled quickstart

Grant cloud access? (Y/n): Y

... Configuring ...

You're ready to go. 🎉